Updated 10 June 2025 at 00:56 IST

iOS 26 to Let You Look Up What's On Your iPhone Screen With Visual Intelligence

Apple Intelligence's Visual Intelligence has gained a new feature that lets you search for things you see on your iPhone's screen.


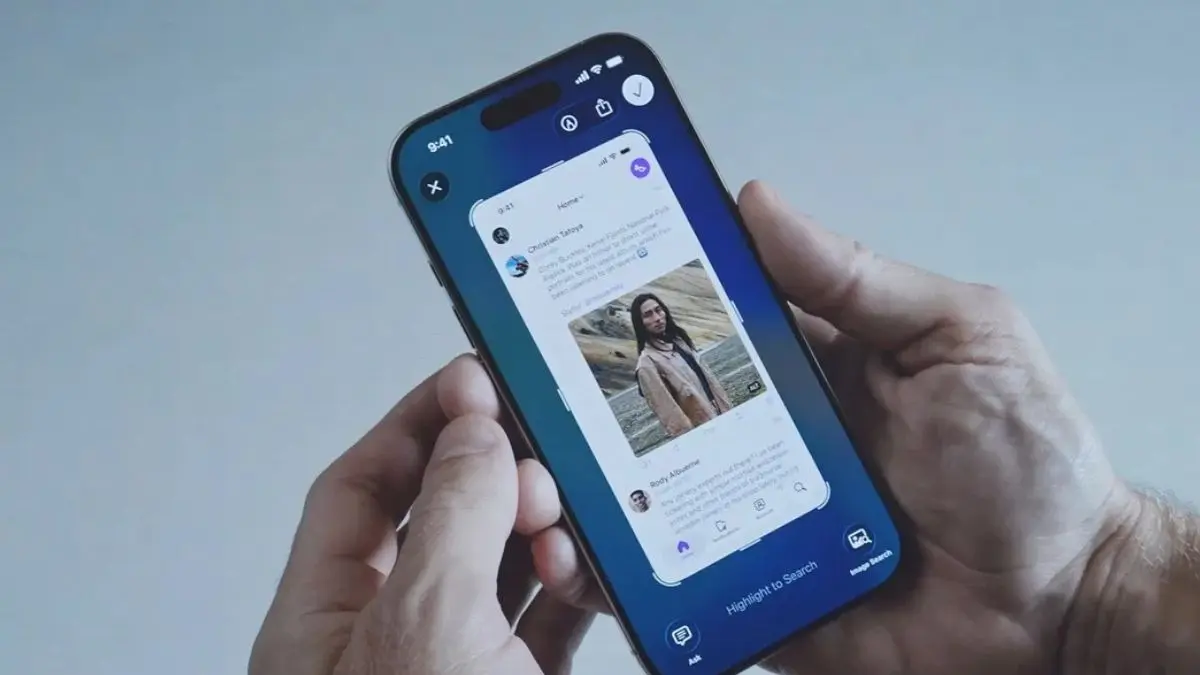

Apple's biggest iOS redesign in years does not arrive alone. At the Worldwide Developers Conference (WWDC) 2025, Apple announced that Visual Intelligence, a major component of Apple Intelligence, can now scan what is on your iPhone's screen, let you look it up online, and even let you ask ChatGPT about it. In other words, if you see something on your screen and want to know more about it, or want to find it online to buy later, Visual Intelligence lets you do that without downloading the image first. But this is just one of many applications of the new Visual Intelligence capability.

“Users can ask ChatGPT questions about what they're looking at on their screen to learn more, as well as search Google, Etsy, or other supported apps to find similar images and products,” said Apple.

It works much like Google's Circle to Search, minus the circling. You can trigger Visual Intelligence through the Camera Control, or through the Action Button on models such as the iPhone 16e or iPhone 15 Pro, and ask it "what's on my screen?", followed by more contextual questions such as "could you search for this product on Google?"

The new Visual Intelligence feature can also recognise when you are looking at an event on the screen and add it to your calendar with the exact time and place. To access the feature, you take a screenshot as you normally would. Once the screenshot is taken, your iPhone asks whether you want to save and edit the screenshot or search it using Apple Intelligence.


The new functionality is part of iOS 26, which introduces a new design language called Liquid Glass, with new app icons, new animations, and translucent windows and tabs inspired by visionOS.


Published By: Shubham Verma

Published On: 9 June 2025 at 23:28 IST